Recent revelations of inflated SAT scores, retention rates and other information have cast doubt on the validity of college ranking systems and raised concerns over how colleges manage and report their data.

Last month, Emory University admitted to inflating its average student SAT scores by reporting the higher scores of admitted students rather than those of students who enrolled. The university did this for at least 12 years to boost its ranking in magazines like U.S. News and World Report.

Prior to this, two other schools had been caught in similar ranking scandals. Claremont McKenna College in California admitted in January to inflating average SAT scores since 2005 and, in November, Iona College in New Rochelle, N.Y., conceded it had misreported a host of data, including average test scores, graduation rates and acceptance rates.

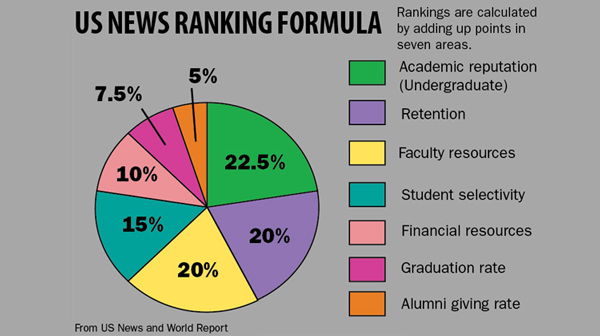

To compile its list of the best schools in the country, U.S. News and World Report measures criteria such as a college’s reputation, student selectivity, retention and faculty resources and then assigns a score. The magazine ranks Ithaca College as the 11th best regional university in the northern U.S.

U.S. News and World Report could not be reached for comment.

Martha Gray, director of institutional research at Ithaca College, said U.S. News and World Report sends the college written questions and her office compiles data from different divisions and departments throughout the college. Gray said multiple people review the information provided to her office against available data and numbers from previous years to make sure it is correct and consistent.

Gray said it would be difficult for information to be tampered with because raw data is available to all departments. Also, information indicating a significant change is thoroughly examined.

“There’s not a single individual person that’s controlling the release of that information,” Gray said. “Multiple eyes see it, there’s multiple checks and balances to make sure that when we run the statistics the numbers look consistent with what the other division is getting.”

In an attempt to broaden its applicant pool, Ithaca College will no longer require prospective students to submit standardized test scores as part of their applications, beginning this year.

Jeffrey Stake, a law professor at Indiana University, said while the scandals of the last year are troubling, the larger problem lies in the methodology used in rankings like that of U.S. News and World Report.

“Of course self-reported data is problematic,” Stake said. “But I wouldn’t worry about that as much as I worry about the fact that anybody who ranks schools is ranking them with weights that are basically arbitrary.”

Stake said he is critical of the magazine’s reliance on factors like reputation, which is based on the previous year’s ranking and provides little to no real information. The magazine also relies on SAT scores, which may not reflect the quality of the undergraduate experience.

“The reputation is almost useless because in the college world what you want to know is how good is a school in the kind of thing I’m going to be doing,” Stake said. “Unless a student has almost no interest in any particular subject, it would not be well-advised for any student to be guided by U.S. News.”

Other magazines use different methodologies to rank colleges. Forbes magazine, for example, examines student success using information from the Integrated Postsecondary Education Data System and measures criteria such as student satisfaction, success after graduation and accumulated debt. On Forbes’ list of the 650 schools it considers to be the best, Ithaca College ranks 333rd.

Michael Noer, executive editor of special projects at Forbes, said the recent college scandals are indicative of the value placed on yearly rankings.

“It’s very much in these colleges’ interest to try very hard to move up these rankings,” Noer said. “It helps you recruit students, which are frankly customers.”

The Princeton Review relies on administrative information in selecting which schools will be included in its books, but it does not rank schools numerically. Rather, it lists the top 20 schools in 62 categories based on the responses of more than 120,000 enrolled college students.

David Soto, director of content at The Princeton Review, said the colleges that provided forged data should be considered exceptions and not the rule. The company goes through a rigorous review process to make sure the data provided is honest and up to date, he said.

“They are outliers in most of the dealings we have with schools,” Soto said. “By and large the data that schools are reporting is honest and reflective of what’s happening on the campus.”

Noer said the rankings should act as a guidepost for prospective students in applying to the right college, and there are multiple personal factors that go into that decision.

“What’s right for them depends on what they want to study, what they want to do with their lives, what they can afford and where they can get in,” Noer said.

Stake said the ranking system does a disservice to prospective students and that there are more helpful ways to release data about colleges.

“Don’t put any rank on it,” Stake said. “Don’t add up factors and say this is the winner according to these factors because that just misleads people into thinking that those are the right weights. Provide the data and let people make up their own mind.”